It’s time to change the narrative around ROI

May 31, 2018

There’s no denying the importance of learning and development. In 2017 alone, approximately $96.3 billion was spent on L&D worldwide. However, as learning professionals, we know there are always strings attached to that number.

No matter your organization’s L&D budget, you will have stakeholders asking for evidence of a return on their L&D investment. In fact, demonstrating return on investment (ROI) is one of the biggest struggles learning managers face today. After all, it’s not just the stakeholders who want to see evidence of results – most L&D professionals are results-driven as well.

The problem is that L&D is among the most challenging business functions when it comes to demonstrating ROI. But what if it didn’t have to be so challenging? What if we’re approaching our ROI measurements all wrong?

ROI measurement today

There are many tools used by modern learning professionals to measure the ROI of their initiatives and, while each of them offers value, they’re often insufficient – particularly when used in isolation.

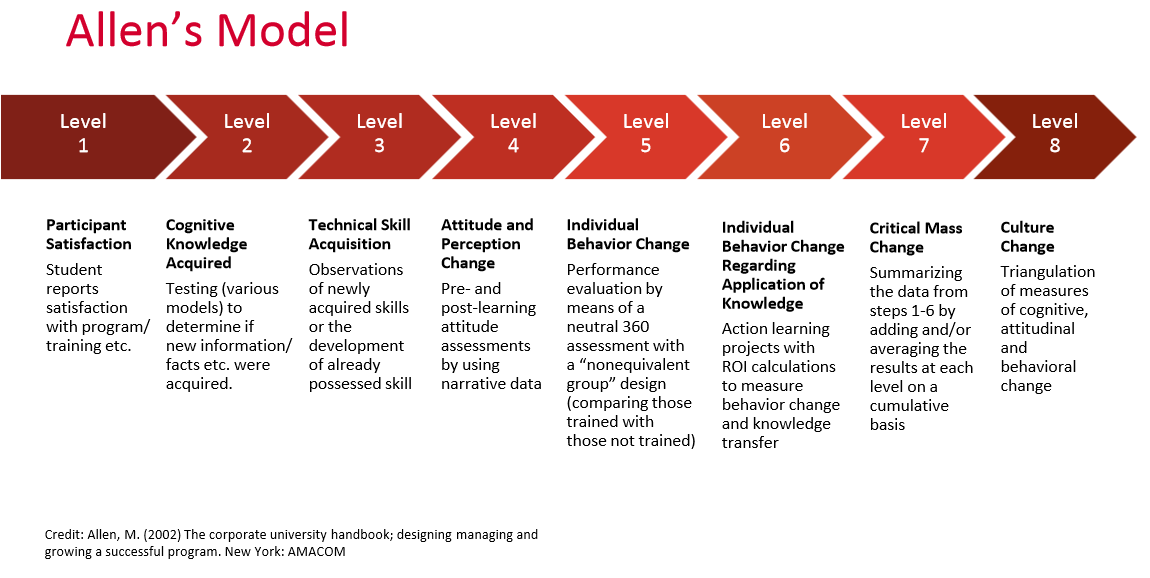

One of the most popular tools is Allen’s Model, which measures the impact of a learning program across eight levels:

- Level 1 determines whether learners were satisfied or dissatisfied with the learning program (i.e., participant satisfaction)

- Level 2 determines the degree to which cognitive knowledge was acquired, usually established through testing

- Level 3 determines the degree to which technical skills were acquired, usually established through observation

- Level 4 is attitudinal and assesses learners’ reactions and beliefs before and after the learning

- Level 5 determines the degree of behaviour change, usually established through observational feedback

- Level 6 determines whether learners can show they are capable of using what they have learned

- Level 7 summarises the previous six levels by averaging out the learning on a cumulative basis

- Level 8 is an attempt to triangulate the change and measure its impact on the culture and outcomes of the organization

While a useful tool, Allen’s Model is incredibly complex and very hard to scale across a large number of learning initiatives. As a result, it’s not a great option for small teams who already feel as if they’re in over their heads.

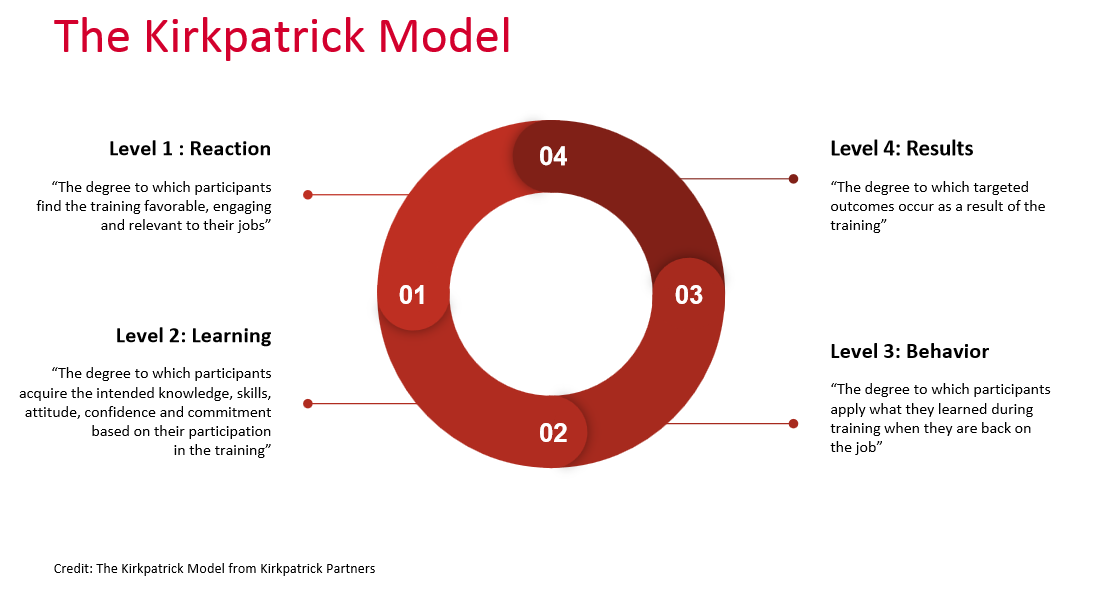

Another popular tool is the Kirkpatrick Model, which uses four levels rather than Allen’s eight. Here, we’ll break down the different levels and their pros and cons.

Level 1 involves measuring learners’ reaction and acceptance. This is typically done using simple evaluations like questionnaires or “smile sheets”.

Not only are these evaluation methods cost-effective, but they also tend to have high distribution and completion rates, as they’re typically issued in the learning setting. However, the answers are usually quite generous, and they may reflect satisfaction with factors that aren’t relevant from an ROI point of view.

Level 2 involves measuring learning through post-learning tests. This could be anything from simple knowledge checks, like multiple choice tests, to comprehensive assessments.

These tests can be very useful in giving a basic understanding of learning success, particularly when combined with pre-tests. However, they are limited in that they only tell you about what a learner is able to recall immediately following the learning.

As we know, knowledge retention tends to decrease rapidly following learning, and a positive test result might not actually indicate the learner’s ability to apply their learning in the days, weeks or months ahead.
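To make the pre-test comparison concrete, here is a minimal sketch that scores improvement as a normalized gain, (post − pre) / (max − pre): the share of the available improvement a learner actually achieved. This metric is swapped in from education research for illustration; it is not part of the Kirkpatrick Model, and the scores are hypothetical. Re-running the same test a month later shows how an impressive immediate result can shrink as retention drops.

```python
def normalized_gain(pre: float, post: float, max_score: float = 100.0) -> float:
    """Share of the available improvement achieved: (post - pre) / (max - pre)."""
    if pre >= max_score:
        # A learner who already scored the maximum has no room to improve.
        raise ValueError("pre-test score is already at the maximum; gain is undefined")
    return (post - pre) / (max_score - pre)


# Hypothetical scores for one learner, out of 100.
pre_test = 40
post_test_immediate = 85  # taken right after the session
post_test_30_days = 60    # same test re-taken about a month later

immediate = normalized_gain(pre_test, post_test_immediate)
delayed = normalized_gain(pre_test, post_test_30_days)
print(f"immediate gain: {immediate:.2f}, 30-day gain: {delayed:.2f}")
```

Dividing by the remaining headroom rather than reporting raw point differences keeps learners who started with high pre-test scores from looking artificially weak.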

Level 3 involves measuring whether an outside party can observe a changed behaviour following learning. Typically, an objective observer will rate the application of a learned skill, such as in the case of employee/manager assessments or post-training evaluations.

This form of evaluation comes with many challenges. Even if you can get managers or other observers to agree to participate, you need to ensure that they fully understand what the learners were trained on and how to identify positive changes.

These evaluations are also typically hard to scale (as they require a great deal of time and manpower) and there will almost always be an observation bias at play.

Finally, level 4 measures the impact of your learning initiative. It involves determining whether the training actually taught the right things, whether the training was applied, and whether that had the intended outcome.

This is the most important metric to measure and is all about tying your learning back to your organization’s wider objectives. Even if your questionnaires, tests, and observations are positive, a lack of proof of impact indicates your learning program wasn’t a success.

Of course, Allen’s Model and the Kirkpatrick Model are not the only two options out there when it comes to evaluating a learning initiative’s success; however, the issues above apply to most evaluation tools.

Our fascination with causation

The issue with these tools and the way that we approach ROI is that so much focus is being placed on causation.

Learning professionals get caught up in formulas and figures to prove a direct link between learning and organizational success, despite the fact that a direct link almost never exists.

By focusing on a need to “prove” that an L&D investment produced a specific result or contributed to an organizational objective, we completely ignore the fact that there is a multitude of factors outside of our control.

Luckily, there is a better way – but it involves a shift.

A multi-faceted learning measurement model

Of course, we can’t simply ignore ROI. Instead, it’s up to L&D professionals to shift the dialogue around ROI from one focused on causation to one focused on correlation.

How? Through data.

It has never been clearer: L&D needs to be a data-driven function and connect its work to existing, valued organizational metrics.

To achieve this, Karen Hebert-Maccaro of O’Reilly Media suggests we shift to a more sophisticated, multifaceted learning measurement model – one that acknowledges the fact that learning is a human experience, with many factors at play.

Her suggested model is founded on correlation (not causation), exploration and monitoring.

Correlate

A correlation-based framework allows L&D to draw direct lines between learning initiatives and business priorities. It is up to the L&D department to look at those priorities, ascertain their relevant human capital needs, and determine how it can meet those needs through learning initiatives.

By following this framework, learning professionals are able to give greater focus to determining a correlation between learning engagement and whether the organization’s human capital needs are being met.

The same correlation-based approach can be used to evaluate data that already exists within your organization. For example, you might look to see whether your most engaged learners are also your best salespeople.
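As a minimal sketch of that sales example, the snippet below computes a Pearson correlation coefficient between learning engagement and sales results. The data, the choice of "hours of learning" as the engagement measure, and quarterly sales as the outcome are all hypothetical assumptions. A value of r close to +1 means the two tend to rise together; in keeping with the correlation-not-causation framing, it says nothing about whether the learning caused the higher sales.

```python
from math import sqrt


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length with at least 2 items")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Co-variation of the two series around their means.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when a sequence is constant")
    return cov / sqrt(var_x * var_y)


# Hypothetical data: hours of learning engagement and quarterly sales per salesperson.
learning_hours = [2, 5, 8, 12, 15]
quarterly_sales = [40_000, 52_000, 61_000, 70_000, 83_000]

r = pearson(learning_hours, quarterly_sales)
print(f"r = {r:.2f}")
```

With real data you would pull engagement from your learning platform and performance from existing sales records, which is exactly the "data that already exists" the approach relies on.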

Explore

To demonstrate learning ROI in the most effective way, we also need to be selective in the measurements we take.

While it is crucial for learning professionals to measure post-learning behaviour and its impact on the business, it is equally crucial to focus those efforts on the most important programs, particularly if your organization offers hundreds or thousands of programs every year.

These will often be high-investment, low-frequency learning initiatives.

Monitor

Finally, it is still important to ensure quality control by monitoring your learning initiatives as they progress, using questionnaires and pre/post-testing.

However, under a multi-faceted learning measurement model, it is recommended that data collection in this area be as limited and precise as possible. Hebert-Maccaro suggests using smile sheets but limiting them to a handful of important questions, such as:

- How effective was the instructor?

- Will you use what you learned over the next 30 days?

- What specifically will you use?

- What was missing?

She also suggests we pay greater attention to metrics that help us understand learner behaviour and how best to adapt programs to those behaviours. This would include looking at:

- Linear (structured) vs. non-linear learning (jumping in and jumping out)

- Deepening (focused learning) vs. broadening (various topics)

- What learners are learning, searching for and not finding (i.e. topical analyses and trends)

- Industry comparisons – what your competitors are doing and how their learners are engaging

Learning Imperative

Hebert-Maccaro’s model also uses an underlying justification – a learning imperative – as a foundation for our commitment to a new narrative around ROI:

“Learning is the ‘Next Economy’ superpower and data re: talent attraction, retention and workforce upskilling and reskilling support a core ‘table stakes’ argument”

Learning is not what it once was. The average shelf life of a skill is estimated at approximately five years. New job titles are being created all the time, and talent is becoming harder to find and retain. Millennials, in particular, are placing a greater emphasis on learning and growth opportunities when choosing a company and deciding whether to stay. To make matters worse, turnover is incredibly expensive: anywhere from six to nine months of an individual’s salary.
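To put that range in concrete terms, here is a quick worked calculation. The $60,000 annual salary is a hypothetical figure chosen only for illustration; with annual salary S and m months of salary lost to turnover:

$$
\text{turnover cost} \approx \frac{m}{12}\,S, \qquad 6 \le m \le 9
$$

$$
S = \$60{,}000: \qquad \frac{6}{12}\times 60{,}000 = \$30{,}000, \qquad \frac{9}{12}\times 60{,}000 = \$45{,}000
$$

In other words, losing a single employee on that salary could plausibly cost between $30,000 and $45,000.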

In short, it is our responsibility to demonstrate to stakeholders that learning is an absolute necessity to stay relevant and competitive in today’s market – regardless of your industry.