Predictive Learning Analytics Reduce Scrap Learning: It’s Not as Difficult as You Think

Oct 03, 2017

At Earthly, it’s not every day that we learn something we feel can change the way learning management works. But, sometimes we do come across new methodologies that really shake up our way of thinking.

We recently had the opportunity to take part in a webinar hosted by Ken Phillips of Phillips Associates. Over the past two and a half years, Ken has developed what he calls the Predictive Learning Analytics methodology: a way for businesses to reduce scrap learning and create more effective learning programs.

In our line of work, we see many methodologies that promise to change learning – some great, some not. We think this is one of the good ones. Here’s why.

The problem: Scrap learning

The problem that Predictive Learning Analytics aims to fix is scrap learning. You may have come across the term before. Ken defines scrap learning as “the gap or difference between learning that is delivered and learning that is applied back on the job”.

If you are familiar with learning, you know there are always people who fail to apply what they’ve learned, regardless of how good a training program you have built. There are others with great intentions, who end up abandoning the new information in the long run.

About 65% of learners fall in this category: those who try to apply their newly learned skills on the job, but eventually revert to their old ways. When you include the learners who make zero attempt to apply their learning, you end up with anywhere from 45% to 85% of learning that ends up as scrap.

The solution: The Predictive Learning Analytics methodology

To solve the scrap learning problem and to increase learning transfer, Ken has developed a standardized methodology based on a mathematical algorithm. It is a way of looking ahead to the end point of your learning program and making a prediction about learner outcomes and actions, giving you a clear idea of what improvements need to be made to change them for the better.

We know – it almost sounds too good to be true. But stick with us.

Ken’s methodology makes predictions of which learners are most or least likely to apply their learning on the job. The algorithm also predicts which managers are likely to do a good or poor job of supporting their learners’ training.

The algorithm evaluates three considerations that contribute to training transfer – learning program design, learner attributes, and the work environment – each broken down into factors:

Program design factors

- New information: whether learners are actually learning something new

- Relevance: whether learners can find a connection between the training program content and their own job

- Investment: whether learners feel what they have learned will help them increase their career opportunities

- Key department business metrics: whether the application of the program’s information will affect identified metrics for business success

Learner attribute factors

- Personal motivation: whether learners are driven to apply what they’ve learned

- Confidence: whether learners feel satisfied with their ability to apply what they’ve learned

- Reflection: whether learners have the chance or willingness to reflect on what they’ve learned before applying it

- Opportunity: whether the learners look forward to learning challenging new things

Work environment factors

- Manager engagement: whether managers actively engage learners, post-program, about what they’ve learned

- Colleague support: whether learners’ work colleagues give post-program support when they apply what they’ve learned

- Application opportunity: whether learners have the immediate opportunity to apply what they’ve learned

How it works: A step-by-step look

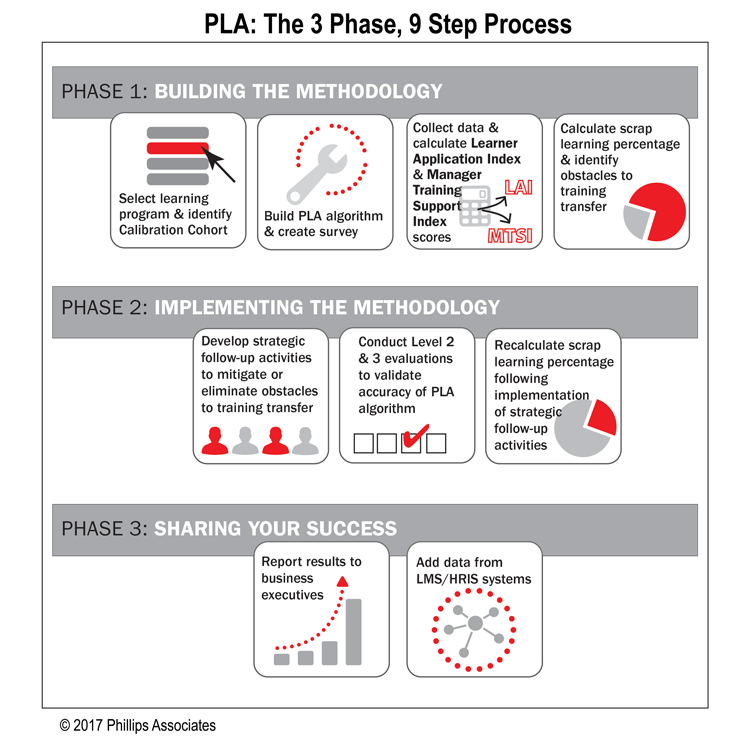

In his webinar, Ken took us through a step-by-step approach to applying the methodology. Broken down into two phases, Predictive Learning Analytics begins the same way all analytics programs do: with data collection and analysis. The key, however, is phase two: implementing solutions that actually mitigate scrap learning and increase managerial support.

Here’s how it’s done.

Phase 1

The first phase of Predictive Learning Analytics starts with selecting a learning program that you wish to analyse and improve. Ken suggests you select a high-profile program that is expected to have a large number of learners. From those learners, you select about 40 to 60 to become your ‘collaboration cohort’. These are the learners from whom you will collect data.

Using the methodology, you build an algorithm and create a survey that is specific to that program and its content. The survey’s questions are designed around the 11 factors we described above.

Once you’ve collected your data from the learners, it can be aggregated to predict each learner’s likelihood of applying what they’ve learned (called the Learner Application Index, or LAI). The data can also give you each manager’s Manager Training Support Index (MTSI) score. Those with a positive MTSI are predicted to do a good job providing post-training support for their learners, while those with a negative MTSI are in need of some extra assistance.
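The webinar summary here does not spell out the algorithm itself, so the following is only a rough sketch of how survey answers could be rolled up into signed scores. Everything in it is an assumption made for illustration: the 1-7 rating scale, the unweighted averaging, the centring on the scale midpoint, the sample data, and the function names `learner_application_index` and `manager_training_support_index`. It is not Ken’s formula.

```python
from statistics import mean

# Assumption: every survey item is answered on a 1-7 agreement scale,
# so 4 is the neutral midpoint. The source does not specify the scale.
SCALE_MIDPOINT = 4

# Hypothetical responses: one record per learner, covering a few of the 11 factors.
responses = [
    {"learner": "A", "manager": "M1",
     "ratings": {"relevance": 6, "personal_motivation": 7, "manager_engagement": 5}},
    {"learner": "B", "manager": "M1",
     "ratings": {"relevance": 3, "personal_motivation": 2, "manager_engagement": 4}},
    {"learner": "C", "manager": "M2",
     "ratings": {"relevance": 5, "personal_motivation": 4, "manager_engagement": 2}},
]


def learner_application_index(ratings):
    """Unweighted mean of one learner's factor ratings, centred on the midpoint."""
    return mean(ratings.values()) - SCALE_MIDPOINT


def manager_training_support_index(records, manager):
    """Mean manager-engagement rating given by a manager's learners, centred on the midpoint."""
    scores = [r["ratings"]["manager_engagement"]
              for r in records if r["manager"] == manager]
    return mean(scores) - SCALE_MIDPOINT


lai = {r["learner"]: learner_application_index(r["ratings"]) for r in responses}
managers = sorted({r["manager"] for r in responses})
mtsi = {m: manager_training_support_index(responses, m) for m in managers}

for learner, score in lai.items():
    print(f"Learner {learner}: LAI {score:+.2f}")
for manager, score in mtsi.items():
    print(f"Manager {manager}: MTSI {score:+.2f}")
```

Centring on the midpoint is just one simple way to get scores that fall on either side of zero, which matches the positive and negative MTSI readings described above. A real implementation would presumably weight the factors rather than average them equally.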

Your final step in this first phase is to calculate your program’s specific scrap learning percentage. About 30 days after the program has been completed, you follow up with your learners and ask them (a) what percentage of the program material they have applied back on the job, (b) how confident they are in that estimate, and (c) what obstacles they faced in applying what they’d learned. This insight will set you up to identify exactly which obstacles your program is facing when it comes to training transfer.
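To make the arithmetic concrete, here is a small sketch of how those three follow-up answers might be turned into a single scrap learning percentage. The source does not say how the confidence rating is combined with the estimate; discounting each estimate by the learner’s confidence is an assumption made here, as are the sample data, the field names, and the helper `scrap_learning_pct`.

```python
from collections import Counter

# Hypothetical 30-day follow-up answers from three learners.
followups = [
    {"applied_pct": 60, "confidence_pct": 80,
     "obstacles": ["no time", "no manager support"]},
    {"applied_pct": 40, "confidence_pct": 50,
     "obstacles": ["no time"]},
    {"applied_pct": 70, "confidence_pct": 100,
     "obstacles": []},
]


def scrap_learning_pct(answers):
    """Return 100 minus the mean confidence-adjusted applied percentage.

    Assumption: each learner's estimate is discounted by their stated
    confidence, which yields a conservative figure for applied learning.
    """
    adjusted = [a["applied_pct"] * a["confidence_pct"] / 100 for a in answers]
    return 100 - sum(adjusted) / len(adjusted)


# Count how often each obstacle is mentioned across the cohort.
obstacle_counts = Counter(o for a in followups for o in a["obstacles"])

scrap = scrap_learning_pct(followups)
print(f"Scrap learning: {scrap:.1f}%")
print("Most cited obstacles:", obstacle_counts.most_common(2))
```

With this sample data the adjusted applied percentages are 48, 20 and 70, which average to 46, leaving 54% as scrap. The obstacle tally is what feeds the next phase: the most frequently cited obstacles are the ones to address first.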

Phase 2

Now that you have all this useful data, it becomes important to act on it. This phase is what Ken refers to as ‘solution implementation’.

This phase of the Predictive Learning Analytics method is all about developing strategic post-program activities that will help you eliminate the obstacles to training transfer that your learners identified. In particular, you consider those with low LAI and low MTSI scores and identify ways to improve them.

Some activities that Ken identifies include sending post-program e-mail tips to learners, establishing a space where learners can comfortably apply their learning in private, and inviting managers and executives to participate in the learning.

After implementing some of these initiatives, it’s time to test out the new-and-improved training program with a fresh group of learners. The goal is to see an overall improvement, with reduced scrap learning and increased learning transfer.

The benefits

While the Predictive Learning Analytics method certainly involves a significant time investment and a lot of strategic thinking, it’s undeniable that the benefits make the added effort worthwhile.

By reducing scrap learning and increasing training transfer, your organisation benefits by:

- Wasting less time and money on learning that is never actually applied

- Targeting the most at-risk learners and managers and discovering ways to effectively increase their skills

- Establishing a completely objective way to compare the quality of your learning programs

- Creating a learning program that looks more credible to your key stakeholders

- Enhancing your reputation among your L&D colleagues

In short, it’s easy to see why we are so impressed with the Predictive Learning Analytics methodology. To learn more about the methodology and how Earthly can help you increase the effectiveness of your L&D efforts, get in touch.